I made a TTS Discord bot that uses your own voice

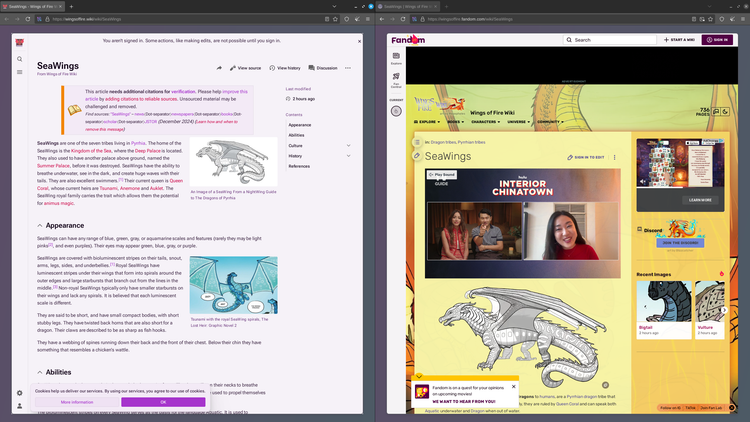

I have a group of friends that I like to talk with frequently. Almost every day, I get the opportunity to hop in a voice call on Discord and just hang out with them. It has become one of our traditions.

Because this tradition is held so dearly, sometimes someone will join the call even when they aren't able to speak or in a setting where it would be awkward to speak out loud. We have a text channel dedicated to text and image communication during voice calls, but it can be difficult to be part of the conversation when you don't have a voice.

For a while, we used a commonly available TTS bot. A mute user would run a command with their message, and the bot would read out the user's name and their message in the voice call. This was fine, but I thought I could do better.

The problem with a bot like this is that it's not easy to tell who is speaking. The bot does announce the speaker at the start, but if you aren't paying attention at the start, you need to check the text channel to verify who sent the message.

At some point, someone made a little joke about making a TTS bot that used our own voices. So I did that.

Two years ago, I created Dreamspeaker, a TTS bot in Python that uses a machine-learning voice synthesis model to generate speech that recreates another voice. When a user sends a TTS command, the bot fetches their reference clip and generates the speech based on their own voice. Take a listen!
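To make that flow a bit more concrete, here is a minimal sketch of the first half: turning a chat message into a speech request and finding the sender's reference clip. The `!tts` prefix, the `voices/<user id>.wav` layout, and every name in this block are assumptions made for illustration. They are not taken from Dreamspeaker's actual code.

```python
from dataclasses import dataclass
from pathlib import Path
from typing import Optional

# Hypothetical layout: one reference clip per Discord user ID.
VOICE_DIR = Path("voices")
# Assumed command prefix, not necessarily the one Dreamspeaker uses.
COMMAND_PREFIX = "!tts "


@dataclass
class SpeechRequest:
    user_id: int
    text: str
    reference_clip: Path


def reference_clip_for(user_id: int, voice_dir: Path = VOICE_DIR) -> Path:
    """Return the path where this user's reference clip is expected to live."""
    return voice_dir / f"{user_id}.wav"


def parse_tts_command(
    user_id: int, content: str, voice_dir: Path = VOICE_DIR
) -> Optional[SpeechRequest]:
    """Turn a chat message into a speech request, or None if it is not a TTS command."""
    if not content.startswith(COMMAND_PREFIX):
        return None
    text = content[len(COMMAND_PREFIX):].strip()
    if not text:
        # A bare command with no message has nothing to speak.
        return None
    return SpeechRequest(user_id, text, reference_clip_for(user_id, voice_dir))
```

Keying the clip on the sender's user ID is what would let each message come out in that person's voice without them having to pick it every time.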

It's certainly not perfect, but it works well enough that I can tell who's speaking without having to think about it. There are better models out there that I could use, but I wanted to make this bot fast. This is going to be used in a real-time voice call, and typing a complete thought before it gets spoken is already way slower than just speaking, so I wanted to keep the processing times low. On my RTX 4070 Ti system, that clip you just heard took just under a second to generate.

The goal isn't to perfectly synthesize someone's voice, anyway. The purpose of this project is to make it easier for mute users to be part of the conversation, and over the past two years, I feel that it has succeeded at that.

My friends were a little freaked out by it at first (this was before mainstream AI video generation), but I'm glad to say that Dreamspeaker has been accepted as a useful (and perhaps silly) tool within our group.

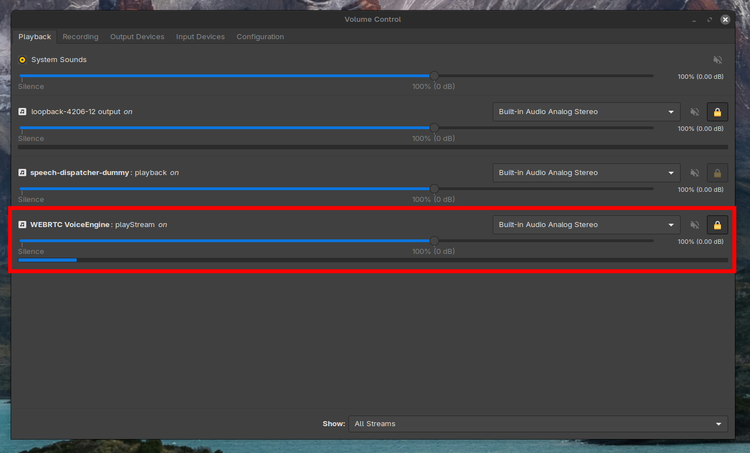

Dreamspeaker uses the XTTS v2 model from CoquiTTS. Coqui as an organization ended up failing, but their TTS system is still available on GitHub and is very functional. All the actual synthesis and generation comes from Coqui. All I've done is wrap it up in a Discord bot.
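If you're curious what voice cloning with XTTS v2 looks like in code, here is a rough sketch using Coqui's Python API (`TTS.api.TTS` and its `tts_to_file` method). The helper names, the file paths, and the hard-coded English language setting are my own assumptions for the example. This is not how Dreamspeaker itself is structured.

```python
from pathlib import Path

# Model identifier for XTTS v2 in Coqui's model catalog.
XTTS_MODEL = "tts_models/multilingual/multi-dataset/xtts_v2"


def synthesis_kwargs(text, reference_clip, out_path, language="en"):
    """Build the keyword arguments passed to Coqui's tts_to_file."""
    return {
        "text": text,
        "speaker_wav": str(reference_clip),  # clip of the voice to imitate
        "language": language,                # assumed: English-only server
        "file_path": str(out_path),
    }


def load_model(device="cuda"):
    """Load XTTS v2 once; requires the Coqui TTS package to be installed."""
    from TTS.api import TTS  # imported lazily so the helpers work without it

    return TTS(XTTS_MODEL).to(device)


def synthesize(model, text, reference_clip, out_path):
    """Write speech for `text` in the reference voice and return the output path."""
    model.tts_to_file(**synthesis_kwargs(text, reference_clip, out_path))
    return Path(out_path)
```

In this sketch you would call `load_model()` once at startup and then `synthesize(model, "hello", "voices/42.wav", "out.wav")` for each message. Keeping the model loaded between messages is one plausible way to keep per-message latency down.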

I won't be making the bot public for the sake of my personal computer, but if you want to run it yourself, I have the code licensed MIT on a GitHub repository. All the instructions for setup are located there as well.
